EMOROBCARE: A Low-Cost Social Robot for Supporting Children with Autism in Therapeutic Settings

We introduce a software architecture and system design for EMOROBCARE, an affordable, open-source social robot created to assist therapists in interventions for children with Autism Spectrum Disorder (ASD) Level 2.

The Challenge: Accessibility in Robot-Assisted Therapy

Children with ASD Level 2 often face significant challenges in social communication and behavior, requiring highly structured and personalized therapeutic interventions. While social robots have proven to be effective “non-judgmental” partners for improving skills like emotion recognition and joint attention, their widespread adoption is hindered by several barriers:

- High Costs: Many existing clinical-grade robots are prohibitively expensive for routine use in clinics.

- Proprietary Ecosystems: Closed software limits the ability of researchers and therapists to customize or extend the robot’s capabilities.

- Need for Natural Interaction: Therapy calls for robots that can take part in triadic interactions (therapist-child-robot) while remaining appealing and non-threatening.

Methodology: Modular Software and Low-Cost Hardware

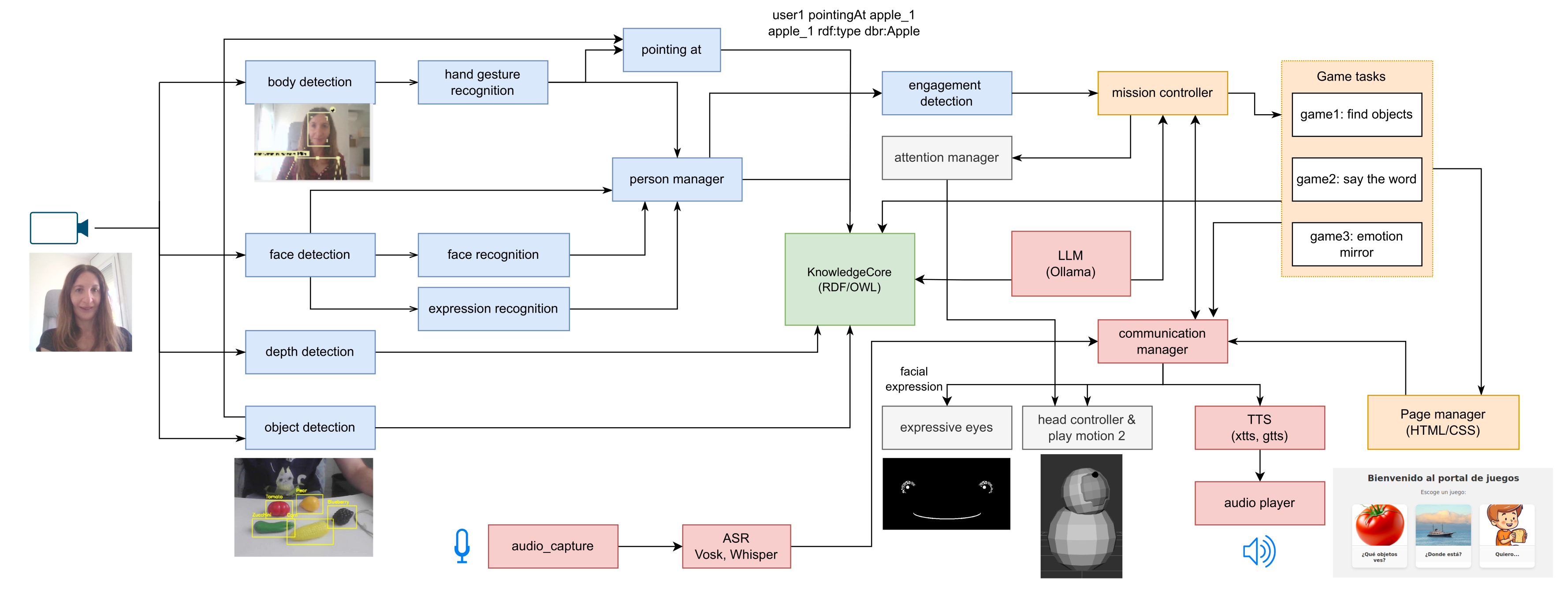

The EMOROBCARE project takes a multidisciplinary approach to building a modular, open-source system. The architecture is currently being tested in a virtual simulation environment (Gazebo) ahead of its scheduled physical deployment in 2025.

- Hardware Design: The robot is 30 cm tall with a 3-DOF head, a screen for a cartoon-like expressive face, and a Jetson Nano for perception.

- Software Stack: Built on ROS 2, the system extends the ROS4HRI framework to include social perception, symbolic reasoning, and LLM-based dialogue management.

Perception Modules

- Vision: Combines YOLOv8 and YOLO-world for object detection, alongside MiDaS for depth estimation and a custom pointing gesture detection module to interpret where a child is looking or pointing.

- Communication: Integrates Whisper and Vosk for speech recognition (ASR) and Coqui XTTS for expressive, emotional text-to-speech (TTS).
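As a rough illustration of how object detections and a pointing gesture might be combined, the sketch below picks the detected object whose center lies closest in angle to a pointing ray. Everything here is an assumption made for illustration: the `Detection` type, the `pick_pointed_object` function, the 2D image-plane simplification, and the 20-degree threshold are hypothetical and are not taken from the project's actual module.

```python
import math
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple

Point = Tuple[float, float]


@dataclass
class Detection:
    """Hypothetical detector output: a label and a box center in pixels."""
    label: str
    center: Point


def pick_pointed_object(
    origin: Point,
    tip: Point,
    detections: Sequence[Detection],
    max_angle_deg: float = 20.0,  # assumed tolerance, not a project value
) -> Optional[Detection]:
    """Return the detection best aligned with the ray origin -> tip.

    origin and tip could be, for example, elbow and fingertip keypoints.
    Returns None when no object falls within max_angle_deg of the ray.
    """
    dx, dy = tip[0] - origin[0], tip[1] - origin[1]
    ray_len = math.hypot(dx, dy)
    if ray_len == 0:
        return None  # no direction can be derived from identical points

    best: Optional[Detection] = None
    best_angle = max_angle_deg
    for det in detections:
        ox, oy = det.center[0] - tip[0], det.center[1] - tip[1]
        dist = math.hypot(ox, oy)
        if dist == 0:
            return det  # fingertip is exactly on the object center
        cos_a = (dx * ox + dy * oy) / (ray_len * dist)
        angle = math.degrees(math.acos(max(-1.0, min(1.0, cos_a))))
        if angle <= best_angle:
            best, best_angle = det, angle
    return best


objects = [Detection("ball", (100.0, 5.0)), Detection("cup", (50.0, 80.0))]
target = pick_pointed_object((0.0, 0.0), (10.0, 0.0), objects)
print(target.label if target else "nothing")
```

A real system would likely work in 3D using the depth estimate, but the same idea of ranking objects by angular distance to the pointing ray would apply.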

Dialogue & Behavior

The dialogue system uses Large Language Models (LLMs) such as Llama 3.2 to generate supportive, age-appropriate responses. A mission controller then coordinates these responses and manages interactive “serious games”.
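One way to picture the dialogue step is a small helper that assembles a chat-style prompt and trims the model's reply before it is spoken. This is a minimal sketch under stated assumptions: the system prompt wording, the `build_messages` and `shorten_reply` helpers, and the two-sentence limit are all hypothetical, and no particular LLM runtime is called.

```python
import re
from typing import Dict, List

# Hypothetical instruction text; the project's real prompt is not given.
SYSTEM_PROMPT = (
    "You are a friendly robot helping a child in a therapy session. "
    "Reply in one or two short, simple, encouraging sentences."
)


def build_messages(child_utterance: str, game_context: str) -> List[Dict[str, str]]:
    """Build a role/content message list, a format many chat LLM runtimes accept."""
    system = f"{SYSTEM_PROMPT} Current game state: {game_context}"
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": child_utterance.strip()},
    ]


def shorten_reply(reply: str, max_sentences: int = 2) -> str:
    """Keep only the first few sentences so spoken output stays brief."""
    parts = re.split(r"(?<=[.!?])\s+", reply.strip())
    sentences = [p.strip() for p in parts if p.strip()]
    return " ".join(sentences[:max_sentences])


messages = build_messages("  I found the ball!  ", "target object: red ball")
short = shorten_reply("Great job! That is a red ball. Balls can bounce. They are round.")
print(messages[1]["content"])
print(short)
```

Keeping prompt assembly and reply trimming as plain functions means they can be checked without loading a model, which suits a simulation-first workflow.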

Results and Impact

The research demonstrates the feasibility of integrating advanced AI tools (LLMs and open-vocabulary object detectors) into a low-cost robotic platform.

Vision Performance: Testing showed that although the task-specific model (YOLOv8) scores higher (F1-score of 0.894), the open-vocabulary model (YOLO-world) provides the flexibility needed for uncontrolled therapy environments, especially when its text prompts include descriptive colors.
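For readers unfamiliar with the metric, the F1-score is the harmonic mean of precision and recall. The sketch below computes it from detection counts and also shows how color-qualified text prompts for an open-vocabulary detector might be built. The counts in the example are made up purely for illustration and are not the study's data; `descriptive_prompts` and the color mapping are hypothetical helpers.

```python
from typing import Dict, List


def f1_score(tp: int, fp: int, fn: int) -> float:
    """F1 = 2PR / (P + R), with P = tp/(tp+fp) and R = tp/(tp+fn)."""
    if tp == 0:
        return 0.0  # no correct detections, so precision and recall are zero
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def descriptive_prompts(labels: List[str], colors: Dict[str, str]) -> List[str]:
    """Prefix each label with a color when one is known, e.g. 'red ball'."""
    return [f"{colors[name]} {name}" if name in colors else name for name in labels]


# Illustrative counts only: 8 correct detections, 2 false alarms, 2 misses.
example_f1 = f1_score(tp=8, fp=2, fn=2)
prompts = descriptive_prompts(["ball", "cup", "book"], {"ball": "red", "cup": "blue"})
print(round(example_f1, 3))
print(prompts)
```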

Communication Accuracy: Testing identified Whisper as the better transcription tool for child speech compared with Vosk. Accurate transcription is critical for maintaining the flow of therapy.

Therapeutic Potential: A prototype game, “Finding Objects,” was successfully simulated, demonstrating how the robot can lead a session by detecting objects, asking questions, providing multimodal feedback (gestures + facial expressions), and sharing fun facts via the LLM.
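The flow of one round of such a game can be sketched as a short function with its perception, speech, and expression capabilities passed in as callables. This is an assumption-laden outline rather than the project's mission controller: `run_round`, its parameters, the spoken phrases, and the expression names are all hypothetical.

```python
from typing import Callable, List


def run_round(
    target: str,
    detect: Callable[[], List[str]],      # returns labels currently visible
    listen: Callable[[], str],            # returns the transcribed child answer
    say: Callable[[str], None],           # sends text to speech output
    express: Callable[[str], None],       # triggers a face or gesture by name
    fun_fact: Callable[[str], str],       # e.g. backed by an LLM
) -> bool:
    """Run one round: detect, ask, listen, give feedback. True on success."""
    if target not in detect():
        say(f"I cannot see the {target} yet. Let's look together.")
        return False

    say(f"Can you find the {target}?")
    answer = listen().lower()

    if target in answer:
        express("happy")                  # multimodal feedback on success
        say("Well done!")
        say(fun_fact(target))
        return True

    express("encouraging")
    say(f"Good try! Let's look for the {target} again.")
    return False


# Stub capabilities stand in for the real perception and speech modules.
spoken: List[str] = []
faces: List[str] = []
success = run_round(
    "ball",
    detect=lambda: ["ball", "cup"],
    listen=lambda: "The ball is here",
    say=spoken.append,
    express=faces.append,
    fun_fact=lambda name: f"A {name} can bounce.",
)
print(success, faces, spoken)
```

Injecting the capabilities as functions lets the same round logic run against stubs, a simulator, or the physical robot.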

Future Impact: By prioritizing affordability and open-source modularity, EMOROBCARE aims to lower the entry barrier for specialized clinics to use social robotics as a standard tool for ASD therapy.