A Roadmap for Responsible Robotics: Promoting Human Agency and Collaborative Efforts

As robots increasingly integrate into human life, how do we ensure they co-evolve with society, promoting human agency and values? This roadmap outlines the key priorities.

Moving Beyond Code: A Roadmap for Responsible Robotics

As robots transition from the controlled floors of factories to the unpredictable spaces of our daily lives, the question of how to ensure they behave “responsibly” has become a matter of global importance. Our new academic article, “A Roadmap for Responsible Robotics,” written in collaboration with more than 30 scholars, provides a comprehensive guide for researchers, policymakers, and industry leaders to navigate this complex sociotechnical landscape.

The Core Challenge: Why Robots Are Not Just Physical AI

One of the central arguments of the article is that robotic systems are often misunderstood as merely physical embodiments of Artificial Intelligence. However, we emphasize that “responsible robotics” is not just a straightforward extension of “responsible AI.”

Robots present unique challenges because they are physically embodied and autonomous. Unlike a chatbot or an image generator, a robot moves through physical environments, exerts kinetic force, and interacts in real time with humans, animals, and the natural world. This physicality introduces immediate safety risks and influences human perception: people often treat moving, goal-oriented machines as “quasi-social actors,” which can lead to issues of deception or inappropriate emotional bonding.

Methodology: An Interdisciplinary Blueprint

The roadmap is the result of the Dagstuhl Seminar on Roadmap for Responsible Robotics, held in September 2023 in Germany. The methodology relied on intensive, interdisciplinary collaboration between experts in robotics, computer science, philosophy, and social sciences. By bringing these diverse perspectives together, the participants identified the specific values that must be upheld as robots become integrated into society.

Key Results: A Human-Centric Framework

The article establishes that responsibility in robotics is fundamentally about human responsibility, not the attribution of accountability to machines. It defines a “Responsible Robotics” framework centered on several core values:

Dignity and Autonomy: Robots should respect the inherent worth of all humans and support their ability to act according to their own interests.

Privacy and Safety: Beyond data protection, robots must respect the “spatiotemporal” privacy of physical spaces and ensure they do not threaten human health or well-being.

Sustainability: Responsibility includes environmental impacts, such as the sourcing of materials and the energy consumption of robot software.

Predictability and Transparency: Stakeholders need to understand why a robot behaves the way it does and be able to anticipate its movements.

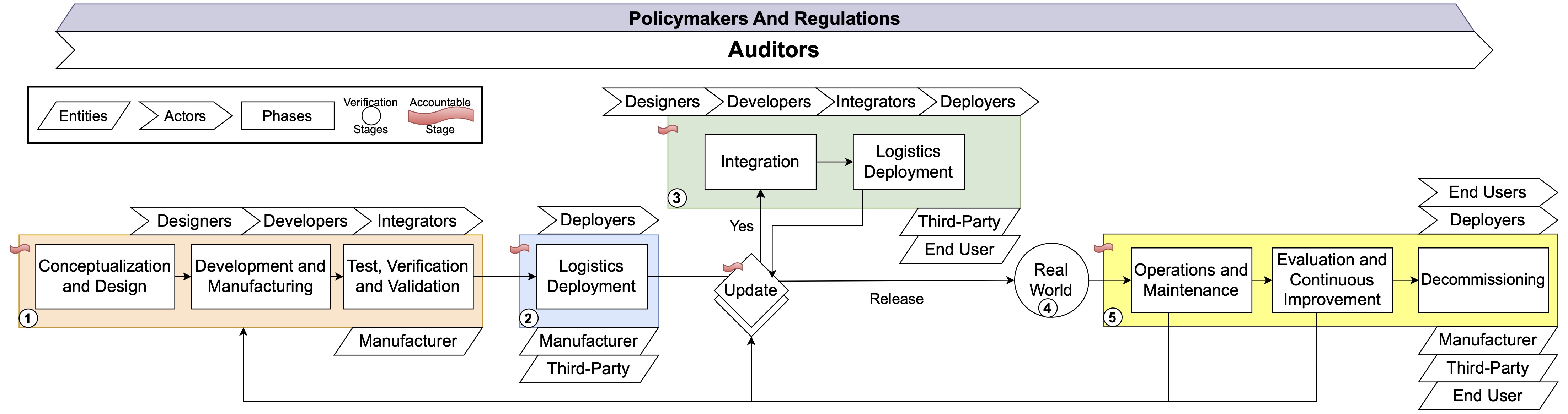

A Lifecycle Approach to Responsibility

A major contribution of the roadmap is its insistence that responsibility must span the entire lifecycle of a robot. This process is structured into five distinct phases:

Design and Manufacturing: Addressing ethical issues and safety standards from the start.

Preparation for Deployment: Rigorous verification by independent organizations.

Updates: Ensuring accountability when software or hardware is modified after release.

Real-world Operation: Managing how the robot behaves in uncontrolled environments.

Post-deployment, Maintenance and Decommissioning: Considering the long-term social and environmental impacts.

The Roadmap: Closing the Gaps

Finally, we identify several “gaps” that must be filled to achieve responsible robotics, categorized by urgency:

Urgent Needs: Establishing progress indicators, creating interdisciplinary teaching curricula, and operationalizing concepts like “explainability” in physical robots.

Medium-term Goals: Developing systems for reporting irresponsible incidents and creating “testbeds” to assess interaction-based harms.

Long-term Visions: Establishing international coordination agencies and clarifying the legal relationship between robotic agency and responsibility.

In particular, we identify several urgent gaps, each listed with the stakeholders who should address it (“Who”):

- Identifying progress indicators for Responsible Robotics (Who: Researchers (social science), Standards committees)

- Requirements Engineering for Responsible Robotics (Who: Researchers (interdisciplinary, Software Engineering))

- Teaching Responsible Robotics (Who: Educators, Researchers)

- Operationalizing explainability / predictability (Who: Engineers, Philosophers)

- Reporting irresponsible incidents (Who: Legislation, Regulation, Professionalization)

- Responsible technological intervention in systemic problems (Who: Policy experts, Regulators, Citizens)

- Planning with interacting values in uncertain situations (Who: Researchers (CS, AI))

- Enabling the second-hand robot market (Who: Insurance, Regulators, Business)

- Educating users to live with robots (Who: Researchers (HRI, Education, Psychology))